Hello again,

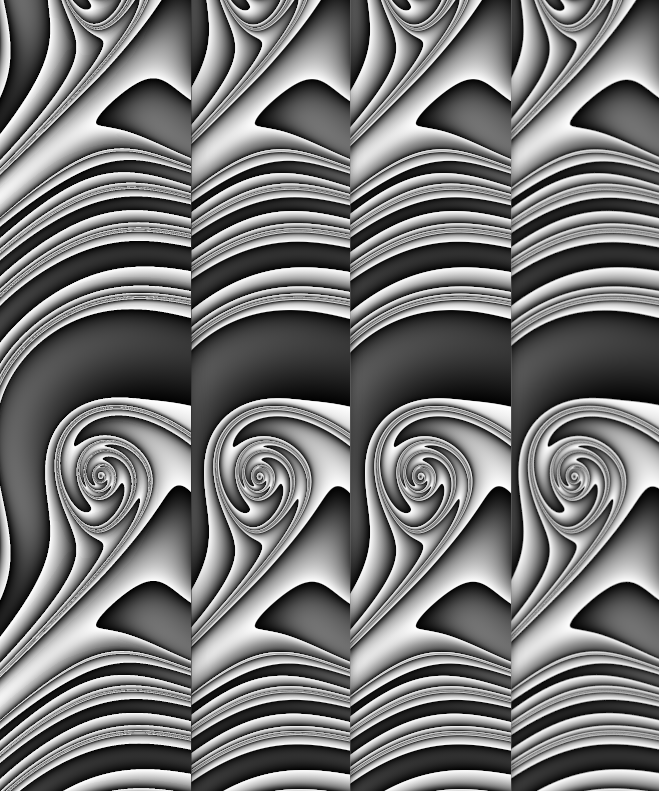

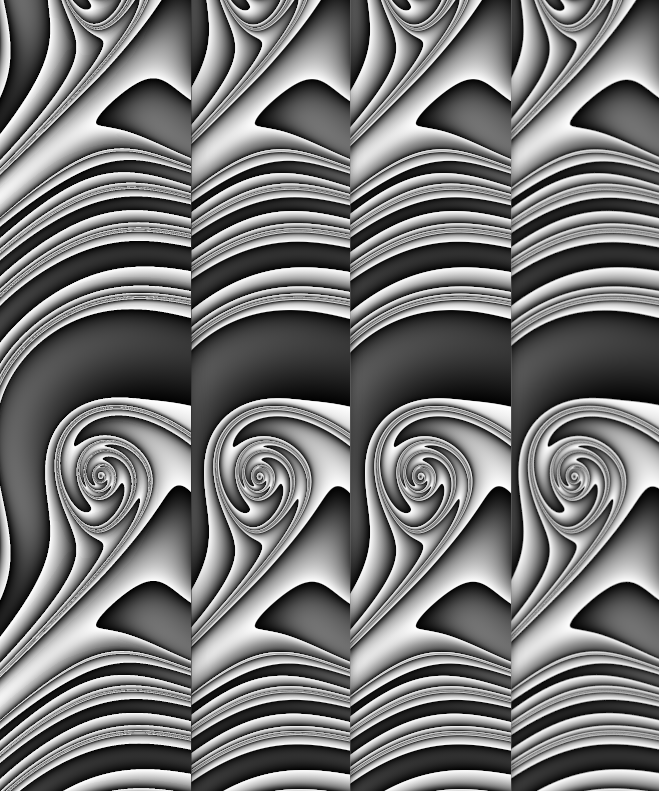

I prepared a little Jupyter notebook which demonstrates how I would approach AA. Here's a quick comparison for what is, in my opinion, UF's greatest current weakness: fine lines.

Please make sure to view these at full size! They are a side-by-side comparison of a simple gnarl render at 800x800 pixels (see the end of the post for parameters).

- UF normal, took 2.06 seconds

- UF custom depth=4, subdivisions=4, took 80.19 seconds

- UF custom depth=3, subdivisions=9, took 221.66 seconds

- Python code using numpy, 55 samples, took 104.53 seconds

In principle, UF has the option to do depth=4, subdivisions=9, but this takes over 20 minutes, and no matter how much better it looks, that is just way too long for the quality it provides. The first three images were rendered on my 3900X in parallel. The last one ran on Google Colab; I don't know which CPU they use, but it is certainly not spread over 12 cores and 24 threads, and it still finished faster than the third example (depth=3, subdivisions=9) while showing less aliasing along the lines.

The Jupyter notebook also contains examples for Julia/Mandelbrot code and a really nasty AA test using cos(r²) on a relatively large part of R². As far as I know, UF is written in Delphi, but the methods demonstrated in the notebook are universal and not specific to Python.

Link to the notebook: https://colab.research.google.com/drive/1SV0aZdecIr-D_zxFBFdndPI-v2k3MvH5?usp=sharing

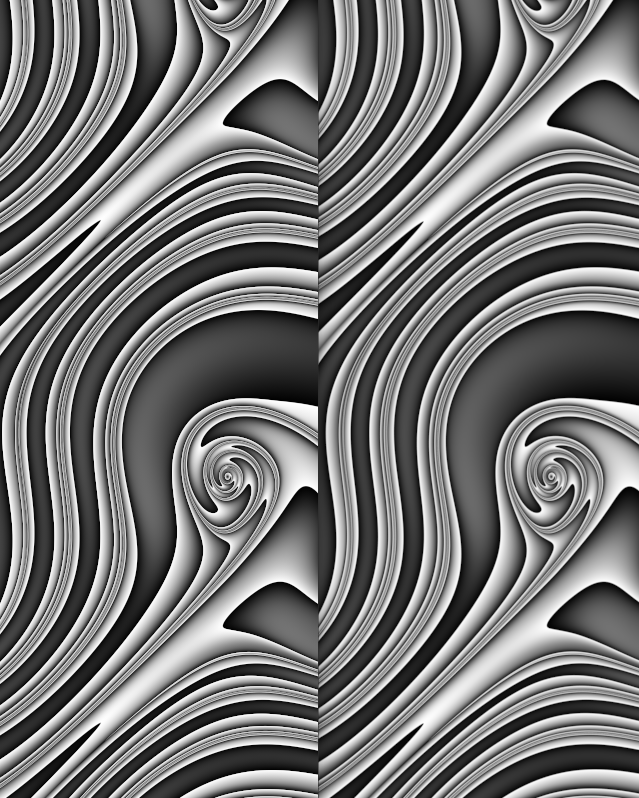

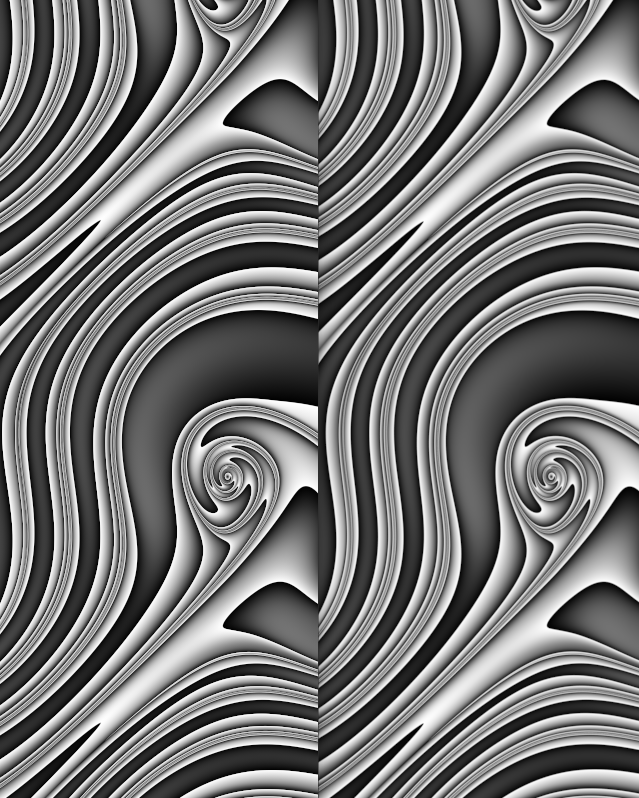

For completeness' sake, here's the same image using higher settings:

- UF custom depth=4, subdivisions=9, took 1963.67 seconds

- Python code using numpy, 233 samples, took 445.87 seconds

Admittedly, this looks OK from UF's side, but it comes at a huge computational cost, and the sharp lines still don't look very clean in some places. Rendering a 4k image this way would mean letting UF render for over 6 hours for a basic single-layer gnarl image, which is really costly in my opinion. Note that the method I used produces slightly less sharp images, and sharpness can of course also be a matter of taste.
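The 4k estimate can be checked with a quick back-of-the-envelope calculation. This sketch assumes that "4k" means 3840x2160 and that render time scales linearly with the pixel count; both are assumptions, and `scale_render_time` is just an illustrative helper.

```python
def scale_render_time(seconds, src=(800, 800), dst=(3840, 2160)):
    """Scale a measured render time by the ratio of pixel counts (assumed linear)."""
    return seconds * (dst[0] * dst[1]) / (src[0] * src[1])

# Measured 800x800 timings from the comparison above, extrapolated to 4k.
uf_hours = scale_render_time(1963.67) / 3600      # UF, depth=4, subdivisions=9
numpy_hours = scale_render_time(445.87) / 3600    # numpy, 233 samples
print(f"UF: {uf_hours:.2f} h, numpy: {numpy_hours:.2f} h")
```

Under these assumptions the UF render lands at roughly 7 hours, which is consistent with the "over 6 hours" figure.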

Running the same method in C++ with OpenMP parallelization and dynamic scheduling (the easiest approach, a single pragma line) reduces the runtime for 233 samples, at the same fractal and resolution, to just 10.2 seconds; 55 samples take about 2.7 seconds.

So the takeaway is that UF seems to require a huge amount of time to reach a good result, and I think choosing the samples well could help a lot here. Of course this ultimately depends on how many samples UF can churn out per second, but I would expect it to easily beat Python. I'd be happy to help, either via this forum or via mail/calls, if you are interested.
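The idea behind dynamic scheduling can also be sketched in Python, although this is only an analogue and not the C++ code: the image is cut into small row bands, and idle workers of a thread pool pick up the next pending band. All names here (`render_band`, `render_parallel`) and the band size are hypothetical, and the pattern rendered is the cos(r²) test with one sample per pixel to keep the example short.

```python
from concurrent.futures import ThreadPoolExecutor
import numpy as np

def render_band(y0, y1, width, height, extent):
    """Render rows y0..y1-1 of the cos(r^2) pattern with one sample per pixel."""
    px = extent / width
    xs = (np.arange(width) - width / 2 + 0.5) * px
    ys = (np.arange(y0, y1) - height / 2 + 0.5) * px
    X, Y = np.meshgrid(xs, ys)
    return y0, 0.5 + 0.5 * np.cos(X * X + Y * Y)

def render_parallel(width, height, extent, band=16, workers=4):
    """Hand out small row bands to a thread pool; free workers take the next band."""
    image = np.empty((height, width))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        jobs = [
            pool.submit(render_band, y0, min(y0 + band, height), width, height, extent)
            for y0 in range(0, height, band)
        ]
        for job in jobs:
            y0, rows = job.result()
            image[y0:y0 + rows.shape[0]] = rows   # place the band at its row offset
    return image

image = render_parallel(100, 100, 30.0)
```

Small bands matter for fractals because some regions take far longer per pixel than others; with a few large static blocks, one slow block would leave the other workers idle.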

P.S.: Parameters, for anyone who wants to try this:

````
__simplest_gnarl_demo {
::Jg6Etin21J1yuxNMMw7Gw/DC6DwW2Jb2kUoDNX6lcIHaPbwaTbTE9CSaRz+3HK5kiWUUfiga
4MD541IMnBzjtNCRmyGULnmSkNYwUeazBRz0Ca9Sxvol8u+elSsj02euWagrYMpHKjPHxFKn
0yX2JjhCfZsf42+R14tSB4yEYIIRuN9VM12UnsK7MEyk3plPBzvuF9XcLShPAzU+qegFxi5d
/i2exkpAk4htQIwMdMO6yYUr66VdCLs50jtNr+IDHqAswbUBxJlIgx5dc+VtfdVsSG0BWej/
WZNTdXWtyyAMhxra5ffFSSRY6nAZKWq+1x8NFGYlHG7r1j6T9qCDc9Nl+slU332QuEtgH34I
4SrsbMkDh4fYiwO7gZj8T5rm6rmNfR4FkpocOOYf2b8xnYQf3zhn4FIvLeGdb5deB8Xy/P5a
b2iwCxKcc68WLXzR2PSIHwFWKRLfaFVJKFAbhI/ktTKSWvn/Jw5FkbBfTrOYhRqVf06mHe4j
OD3d+85xhTsjOCzqm/DF/OqHPxQfHkw2TzO=
}

Gnarls.ufm:simplest_gnarls {
; Original formula otto magus
; simplified to play around with by Phillip S.
::ELS1djn2t9UTrRMIQ07DM/HGSvkkdJkslepUhQ/jsYTshBMqYcBTY/xXNCtXqX03Hz8eiAb4
w7IcQC6FHHVaEQIxp8JmeE0WrLpHt65EhXJ11HNIsXw8qc5EHTgTPXoR3A1Sbspe0drNbM7P
pv/f6xieO/YSsu/KN00S7FqjT/v+XjK3XoBE+Sya7jc5fe88jxMEhZ13yH6MZgDalo6+9Ne1
pVbBaxI96tKEWlx8aEvl+fTKT+Zdf3VqvrJLuYE3Qwp8sdmn4wuI5zJ9yVqEyk0FYrRU9ZpC
V/mrYovc6SjoMznThwPA+J7ZYA==
}

phs.ucl:GnarlAlgo {
::U9uskin2tW1yuJOMU09Ix/wVwGCVlptLrKSlpa0spLqa7yRakbiTwSO2RJmZo81P+VcCGbTr
6gEkw1XfO3X+YCjIud6EAKpckAaQitwa4qVKT586GKePcgTLkGn3Q2jpD+K+L/Ji0+NrglqF
HWhw+zr2FveF8NjnDLXj2/7OBuxRkxcATick1Clzb0xp1rc0bdLmfAuUHdZqVIlDIfn2PlVY
Md9GxsCSpdP3nzp82v/OseNMTwFIKkvFxqwy0AkPp4ZGcMBj63LAkAxuxjfHo2io+xFOO7ri
HmOpkwQ0bDx/ra+fStzHxsKxWL3zJsCZnYNcfBm1REvLL5K818S7wOcezgzGVcD8S4HsiEQ4
aULXIbgjyrssYIPKCXCfdailH26huNLLsy6cnA1Ky8ZZYOwMemdOkihw53okD97JCkes6d1G
TRx+B3g2mRDaHjZqi3lRLtWijxMM7ZUBZXns8/RiBVKtEW8fIeiWK20WtrGzEqppRNc/wZAf
wj+hRgUcMAv9PfSWU54xpUMyeE1Wh7EwLE9hkXkyA+00rMFDCKvah6rbEM7kxujcR1DiGPpk
2cwpQRqwFFEqWUSV3ooOjktPEpSoNenml3NcWFvTOhL3kTywI5WgLR7om7xEEhsaL56nMULF
eQxusDMb8dctoawqOZIzCg++KLuavMq5K3tZhv7XZMgZ7qhgy3eSxj1hSplGS5D+lhM7nETz
xFtOVyJhSQACDeEN8se4x3Yzh+cFaQ7TM/M0AnO5fsDoYyL=
}
````
